It’s 2019, and the majority of the ML community is finally publicly acknowledging the prevalence and consequences of bias in ML models. For years, dozens of reports by organizations such as ProPublica and the New York Times have been exposing the scale of algorithmic discrimination in criminal risk assessment, predictive policing, credit lending, hiring, and more. Knowingly or not, we as ML researchers and engineers have not only become complicit in a broader sociopolitical project that perpetuates hierarchy and exacerbates inequality, but are now actively responsible for disproportionate prison sentencing of black people and housing discrimination against communities of color.

Acknowledgement of this bias cannot be where the conversation ends. I have argued, and continue to argue, that even the individual ML engineer has direct agency in shaping fairness in these automated systems. Bias may be a human problem, but amplification of bias is a technical problem — a mathematically explainable and controllable byproduct of the way models are trained. Consequently, mitigation of existing bias is also a technical problem: how, algorithmically, can we ensure that the models we build are not reflecting and magnifying human biases in data?

Unfortunately, it is not always immediately possible to collect “better training data.” In this post, I give an overview of the following algorithmic approaches to mitigating bias, which I hope can be useful for the individual practitioner who wants to take action:

adversarial de-biasing of models through protection of sensitive attributes,

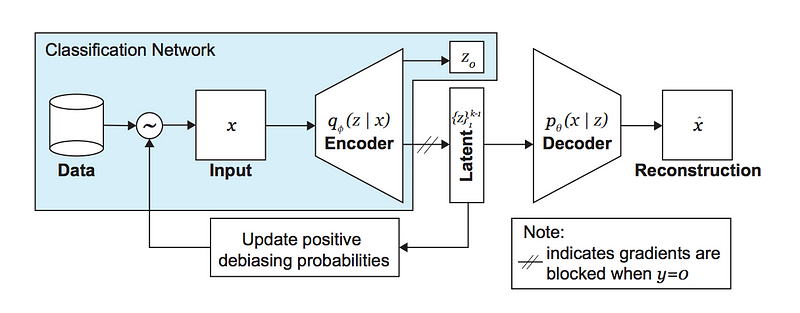

encoding invariant representations with semi-supervised, variational “fair” autoencoders,

dynamic upsampling of training data based on learned latent representations, and

preventing disparity amplification through distributionally robust optimization.

I do my best to link to libraries, code, tutorials, and resources throughout, but if you’re looking to dive right in with code, IBM’s AI Fairness 360 toolkit looks like a decent place to start. Meanwhile, let’s get started with the math.

I. Adversarial De-biasing

Adversarial de-biasing is currently one of the most popular techniques for combating bias. It uses adversarial training to strip information about a sensitive attribute out of the latent representations the model learns.

Let Z be some sensitive attribute that we want to prevent our algorithm from discriminating on, e.g. age or race. It is typically insufficient to simply remove Z from our training data, because it is often highly correlated with other features. What we really want is to prevent our model from learning a representation of the input that relies on Z in any substantial way. To this end, we train our model to simultaneously predict the label Y and prevent a jointly-trained adversary from predicting Z.
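To see why dropping the Z column is not enough, here is a toy illustration on synthetic data. The feature named `proxy` is hypothetical: it stands in for any real feature (a zip code, say) that happens to track Z. Z is never given to the "model", yet a one-line threshold rule on the proxy recovers it for most rows.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 10_000
z = rng.integers(0, 2, size=n)          # sensitive attribute Z (0 or 1)
proxy = z + 0.3 * rng.normal(size=n)    # hypothetical feature strongly correlated with Z
other = rng.normal(size=n)              # feature unrelated to Z

# "Remove" Z: the training matrix contains only the remaining features.
X = np.column_stack([proxy, other])

# A trivial threshold on the proxy column alone still recovers Z.
z_hat = (X[:, 0] > 0.5).astype(int)
recovery_accuracy = float(np.mean(z_hat == z))
print(f"Z recovered from the proxy feature alone: {recovery_accuracy:.1%}")
```

Any model trained on `X` can therefore learn to rely on Z indirectly, which is what the adversary below is meant to prevent.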

The intuition is as follows: if our original model produces a representation of X that primarily encodes information about Z (e.g. race), an adversarial model could easily recover and predict Z from that representation. By the contrapositive, if the adversary fails to recover any information about Z, then we have learned a representation of the input that is not substantially dependent on our protected attribute, at least as far as an adversary of that capacity can detect.
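One common way to write this trade-off down is sketched here. The symbols are introduced for illustration only: θ for the shared layers, φ_Y for the Y head, φ_Z for the adversary's head, cross-entropy losses L_Y and L_Z, a weight λ > 0 setting how strongly the shared layers are penalized when the adversary succeeds, and a learning rate η.

$$
\begin{aligned}
\phi_Z^{*}(\theta) &= \arg\min_{\phi_Z} \; \mathcal{L}_Z(\theta, \phi_Z), \\
\theta^{*}, \phi_Y^{*} &= \arg\min_{\theta,\, \phi_Y} \; \Big[ \mathcal{L}_Y(\theta, \phi_Y) - \lambda \, \mathcal{L}_Z\big(\theta, \phi_Z^{*}(\theta)\big) \Big], \\
\theta &\leftarrow \theta - \eta \left( \nabla_\theta \mathcal{L}_Y - \lambda \, \nabla_\theta \mathcal{L}_Z \right).
\end{aligned}
$$

The last line is a single gradient step on the second objective with the adversary held fixed. The adversary's gradient enters the shared layers with a flipped sign, and with λ = 0 the procedure reduces to ordinary training of the Y head.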

We can think of our model as a multi-head deep neural net with one head for predicting Y and another head for predicting Z. We backpropagate normally, except that the gradient flowing from the Z head back into the shared layers is negated. The Z head itself still learns to predict Z as well as it can, while the shared layers are pushed to make that prediction harder.
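A minimal NumPy sketch of that two-headed update follows. It makes some simplifying assumptions that are not from the post: a single linear shared layer `W`, logistic heads `u` (for Y) and `v` (for Z), mean binary cross-entropy losses, and a hypothetical adversary weight `lam`. All function names are illustrative, not any library's API. The only non-standard line is the encoder update, where the gradient arriving from the Z head is subtracted rather than added.

```python
import numpy as np


def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))


def bce(p, t, eps=1e-12):
    """Mean binary cross-entropy between probabilities p and 0/1 targets t."""
    p = np.clip(p, eps, 1.0 - eps)
    return float(-np.mean(t * np.log(p) + (1.0 - t) * np.log(1.0 - p)))


def forward(W, u, v, X):
    """Shared linear layer H = XW feeding a Y head (u) and a Z head (v)."""
    H = X @ W
    return H, sigmoid(H @ u), sigmoid(H @ v)


def losses(W, u, v, X, y, z):
    _, py, pz = forward(W, u, v, X)
    return bce(py, y), bce(pz, z)


def gradients(W, u, v, X, y, z):
    """Analytic gradients of L_Y and L_Z w.r.t. the shared layer and each head."""
    n = X.shape[0]
    H, py, pz = forward(W, u, v, X)
    dy = (py - y) / n  # dL_Y / d(logit_y) for mean BCE
    dz = (pz - z) / n  # dL_Z / d(logit_z) for mean BCE
    return {
        "u": H.T @ dy,
        "v": H.T @ dz,
        "W_y": X.T @ np.outer(dy, u),  # L_Y gradient reaching the shared layer
        "W_z": X.T @ np.outer(dz, v),  # L_Z gradient reaching the shared layer
    }


def adversarial_step(W, u, v, X, y, z, lam=1.0, lr=0.1):
    g = gradients(W, u, v, X, y, z)
    u_new = u - lr * g["u"]  # Y head: ordinary descent on L_Y
    v_new = v - lr * g["v"]  # Z head (adversary): ordinary descent on L_Z
    # Shared layer: descend L_Y but ascend L_Z, i.e. the gradient coming back
    # from the Z head is negated (and scaled by lam) before it is applied.
    W_new = W - lr * (g["W_y"] - lam * g["W_z"])
    return W_new, u_new, v_new


# Toy usage: y depends only on x0, while x1 is a proxy for z.
rng = np.random.default_rng(0)
n = 500
z = rng.integers(0, 2, size=n).astype(float)
x0 = rng.normal(size=n)
x1 = (2 * z - 1) + 0.5 * rng.normal(size=n)
X = np.column_stack([x0, x1])
y = (x0 > 0).astype(float)
W = 0.1 * rng.normal(size=(2, 4))
u = 0.1 * rng.normal(size=4)
v = 0.1 * rng.normal(size=4)
for _ in range(200):
    W, u, v = adversarial_step(W, u, v, X, y, z, lam=1.0, lr=0.1)
L_Y, L_Z = losses(W, u, v, X, y, z)
print(f"L_Y={L_Y:.3f}  L_Z={L_Z:.3f}")
```

In practice you would use an autodiff framework and a deeper shared network, often implementing the sign flip as a "gradient reversal" layer. Simultaneous gradient steps on a min-max objective like this one can oscillate, so `lam` and the learning rates usually need tuning.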

For More:

https://towardsdatascience.com/algorithmic-solutions-to-algorithmic-bias-aef59eaf6565